Why Apple vs. FBI Matters

The Apple vs. FBI legal battle over the need to unlock a dead terrorist's iPhone has been top of mind for many IT professionals, civil rights advocates, law enforcement supporters, legal pundits, and cyber-security experts. I wasn't planning to write about this topic, given that it has been thoroughly covered elsewhere. However, our clients have been engaging in a pretty lively private debate over Apple's refusal to comply with a court order: Who is right? Apple or the FBI? And why is the battle over this one iPhone so important to Apple? And maybe to the rest of us? Here are some of the highlights from the discussion that has dominated our Pioneers' private forum over the past three weeks.

What are the Legal Issues?

Donald Callahan has done a wonderful job of researching and summarizing the legal issues. I recommend reading his Duquesne Advisory analysis of the legal arguments on both sides, entitled "‘Backdoor battle’: can Apple beat the FBI in the federal courts?"

I found the most chilling argument in Apple's legal brief (as summarized by Donald) to be:

“If Apple can be forced to write code in this case to bypass security features and create new accessibility, what is to stop the government from demanding (more): that Apple write code to turn on the microphone in aid of government surveillance, activate the video camera, surreptitiously record conversations, or turn on location services to track the phone’s user? Nothing.”

Donald comments:

"This is an even bigger point than Apple says, not limited to phones. The real risk is turning the IoT (Internet of Things) into an IoS (Internet of Surveillance), through new cases that leverage the precedent to expand government authority to force technology companies to create special software to help them hack into anything and everything."

"Presumably, the surveillance-enabled code would be distributed to unwitting devices in the form of automatic software updates, which would, of course, have to be digitally signed by the software company."

"As New York federal Judge James Orenstein wrote in a very recent, similar AWA (All Writs Act) case won by Apple: 'In a world in which so many devices, not just smart phones, will be connected to the Internet of Things, the government’s theory … will result in a virtually limitless expansion of the government’s legal authority to surreptitiously intrude on personal privacy.'"

After deftly summing up all of the key legal arguments, Donald concludes:

"Overall, Apple has a strong case. Among the detailed points, Apple’s contention that the government has not shown that the company’s help is essential to hack into an iPhone 5c without the new software looks like a 'killer argument.' "

"More broadly, a government win - in what is obviously a 'test case' - would establish an extraordinarily dangerous precedent for conscripting, not just technology companies, but ordinary citizens, to do anything and everything considered 'necessary' in an investigation."

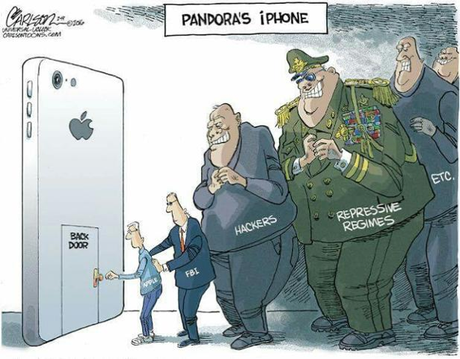

"If Apple does finally lose in the federal courts, there could well be a number of unpleasant consequences, including:

- Authoritarian regimes will demand the same thing

- Cyber-criminals will get hold of the technology for their own use

- US local, state and federal authorities will obtain sweeping orders for “assistance” from technology companies

- With successive similar cases, the Internet of Things – which already has plenty of security issues – will also become the Internet of Surveillance."

In addition to Donald's legal summary, Peter Horne posted a copy of the amici curiae brief that was filed by the Electronic Frontier Foundation and 46 technologists, researchers, and cryptographers. That brief begins with this sentence:

"What the government blandly characterizes as a request for technical assistance raises one of the most serious issues facing the security of information technology: the extent to which manufacturers of secure devices like Apple can be conscripted by the government to undermine the security of those devices."

Here are the four things I found particularly interesting in the Electronic Frontier Foundation (EFF) brief:

- That the order "requires Apple to write code that will undermine several security features it intentionally built into the iPhone and then to digitally sign that code to trick the phone into running it."

- That digital signatures are used by Apple "to ensure the authenticity, integrity, and, as appropriate, the confidentiality" of an electronic record. "In the context of software updates for computers and smartphones, digital signatures ensure a person downloading the update that he or she is receiving it from the trusted source....When Apple signs code, its digital signature communicates Apple’s trust in that code. The signature is its endorsement and stamp of approval that communicates the company’s assurance that each and every line of signed code was produced by or approved by Apple."

- "The Order also compels Apple to have its programmers write code that will undermine its own system, disabling important security features that Apple wrote into the version of iOS at issue....By compelling Apple to write and then digitally sign new code, the Order forces Apple to first write a message to the government’s specifications, and then adopt, verify and endorse that message as its own, despite its strong disagreement with that message. The Court’s Order is thus akin to the government dictating a letter endorsing its preferred position and forcing Apple to transcribe it and sign its unique and forgery-proof name at the bottom."

- "The compelled speech doctrine prevents the government from forcing its citizens to be hypocrites....It makes no difference that Apple's edited code and signature will be communicated only to the government or internally."
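To make the EFF's point about signatures concrete, here is a minimal sketch of how code signing works in principle: textbook RSA applied to a SHA-256 digest of an update. It shows why the FBI needed Apple: a device that only runs updates verifying against the vendor's public key cannot be given altered code unless the holder of the private key signs it. This is an illustration under stated assumptions, not Apple's actual scheme, which none of the sources describe. The key is a toy built from two known Mersenne primes (2**61 - 1 and 2**89 - 1), and unpadded RSA with a key this small would be insecure in practice; the function names are hypothetical.

```python
import hashlib

# Toy RSA key pair built from two known Mersenne primes (2**61 - 1 and 2**89 - 1).
# Illustration only: real code signing uses far larger keys and padded schemes.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus, baked into every device
E = 65537                          # public exponent, also public
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent, kept secret by the vendor (Python 3.8+)


def digest(code: bytes) -> int:
    """Hash the update and reduce the digest into the RSA modulus range."""
    return int.from_bytes(hashlib.sha256(code).digest(), "big") % N


def sign(code: bytes) -> int:
    """Vendor side: only the holder of the private exponent D can produce this value."""
    return pow(digest(code), D, N)


def verify(code: bytes, signature: int) -> bool:
    """Device side: anyone holding the public key (N, E) can check the signature."""
    return pow(signature, E, N) == digest(code)


if __name__ == "__main__":
    update = b"iOS update: enforce passcode retry limits"
    sig = sign(update)
    print(verify(update, sig))                          # True
    print(verify(b"iOS update: no retry limits", sig))  # False: altered code fails
```

Changing a single byte of the update changes its digest, so an existing signature cannot be reused for modified code; a new signature requires the private key. That is the sense in which the EFF describes the signature as Apple's "unique and forgery-proof name."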

Peter Horne comments:

"The EFF gets the issue correct: it is about Apple's digital signature...

"The EFF does not, however, raise the issue that the end user has no choice but to accept everything signed by Apple.

"It is completely correct to say Apple controls the key. My contention is that even if never used, the owner of the phone should always be free to choose when, or if, they trust any key. That way the responsibility is with the owner, not the manufacturer. If Apple didn't want to retain that right as part of its business model, it would help prise open the keys from the dead terrorist's hands, because it would be the dead terrorist's responsibility to keep the phone private, not Apple's."

So Pete is pointing out that Apple's business model, which gives it control of the operating system, all the apps, and the end-to-end encryption, makes Apple vulnerable to a criminal justice request that it turn over the key to unlock a user's encrypted information.

What are the Ethical Pros & Cons?

Both Donald Callahan and Scott Jordan seem to feel that, if Apple were to comply with the FBI's request to write special software to unlock a customer's password-protected, encrypted information and to digitally sign that software, it would establish a dangerous precedent. They (and many others) seem to feel that once this iPhone unlocking tool exists, it will fall into the wrong hands, and that once a device manufacturer can be made to decrypt a customer's private information, we will slide quickly into a surveillance state. The alternative is for suppliers to claim, as Apple and other companies do, that the equipment or cloud supplier has no control over, nor visibility into, what the customer has stored and encrypted on its devices and in its cloud, and that only the customer herself is able to unlock the encrypted information.


Scott Jordan apparently feels that the FBI is making unreasonable demands of Apple, that the FBI bungled the case to begin with, and that Apple is within its rights to protect its customers' information vigilantly. If we cannot trust Apple to protect our information, the Apple brand is devalued. In a beautifully written Customers.com Forum post that I have chosen to title "Apple vs. the FBI: Information as a Weapon," Scott builds up to this point:

"So now we stand on the cusp of a Constitutional crisis. On the one hand is the impenetrable hull of iPhones like yours and mine; on the other hand is an investigative agency with an agenda to secure a tool to turn any suspect's iPhone possessed by any arm of the government against its owner. Such a tool cannot be contained. Not within the FBI, not within American law enforcement, not within the United States. Not in the age of the Internet, of thumb drives the size of a lozenge. Not in the age of such governmental follies as more than two million deeply intimate dossiers of security-cleared professionals and military members exposed for years by an Office of Personnel Management run by a political hack. Not in the era of a Secretary of State who chatted over an unsecured server about the location and movements of a doomed Ambassador."

Peter Horne, however, often the contrarian, takes a different slant. Stepping back from the question of whether Apple should be legally compelled to write software that provides a back door into its devices for government agencies to spy on people, Pete questions Apple's motivations. Is Apple really concerned about our privacy, or is Apple concerned about its ability to control our access to our own devices and information? In a provocative Customers.com Forum post that I have titled "Apple vs. FBI: The Truth that Needs to be Known," Pete writes:

"So to me, it is a bit of a stretch for me to think that Apple is, under the leadership of Saint Tim, fighting for the privacy of the world. No; Apple is holding the keys to all the devices it manufactures because that is its business model. It is how Steve Jobs got record companies to sign up for iTunes, it is how Apple wants to control your interactions with its cloud, and it is how Tim Cook will eventually get cable TV shows on Apple TV. Because Apple controls the keys to your devices, it controls how, and at what price, you access your content. In the future, it also lets Apple decide in the face of falling profits to mine your data and sell it to advertisers just like Google, Facebook and Microsoft. The master key will let them do that, and I believe that the stock market will eventually demand it because that's what corporations do. Just look at Windows 10.

"Now the problem for Apple is that the US government has also quite rightly identified that Apple can do whatever it wants to the devices it manufactures, because it holds the ultimate key that all Apple devices will obey. So why wouldn't the FBI want to avail themselves of the ability to use this key, the already existing back door to all Apple devices? Apple is a US company under US law; the San Bernardino guy was an abomination and there may be more, so Apple, here's the law, now get on with it. Even if the FBI wants to make its point and do it publicly and make case law rather than do it under secret laws like FISA, that is its prerogative. It's what governments do."

Pragmatic Solution?

Personally, I like Eric Castain's proposed solution:

"It seems to me that the easiest way for Apple to move forward is to simply change the install process to require not only their master key but also the end user's PIN before initiating an OS update. Yes, that will cause some percentage of their customers to never bother doing an upgrade to the next version of the OS. BUT it will take the whole back door approach being advocated by the Feds off the table."

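For readers who want to see what Eric's proposal means in practice, here is a minimal sketch of the device-side rule he describes: an OS update installs only if the vendor's signature checks out and the owner enters the correct PIN. Everything here is hypothetical. The names (`PinRecord`, `enroll_pin`, `may_install_update`) and the PBKDF2 iteration count are assumptions, and `vendor_signature_valid` stands in for a real code-signing check rather than implementing one.

```python
import hashlib
import hmac
import os
from dataclasses import dataclass

# Hypothetical work factor for the PIN hash; a real device would tune this.
ITERATIONS = 200_000


@dataclass(frozen=True)
class PinRecord:
    """Hypothetical on-device record: a salted, slow hash, never the PIN itself."""
    salt: bytes
    pin_hash: bytes


def enroll_pin(pin: str) -> PinRecord:
    """Create the stored record when the owner sets a PIN."""
    salt = os.urandom(16)
    pin_hash = hashlib.pbkdf2_hmac("sha256", pin.encode(), salt, ITERATIONS)
    return PinRecord(salt, pin_hash)


def pin_matches(record: PinRecord, entered: str) -> bool:
    """Re-derive the hash from the entered PIN and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", entered.encode(), record.salt, ITERATIONS)
    return hmac.compare_digest(candidate, record.pin_hash)


def may_install_update(vendor_signature_valid: bool, record: PinRecord, entered_pin: str) -> bool:
    """Dual authorization: the vendor's signature alone is no longer sufficient.

    `vendor_signature_valid` is a stand-in for a real signature check,
    which is outside the scope of this sketch.
    """
    return vendor_signature_valid and pin_matches(record, entered_pin)


if __name__ == "__main__":
    record = enroll_pin("4921")
    print(may_install_update(True, record, "4921"))   # True: signed and owner-approved
    print(may_install_update(True, record, "0000"))   # False: signed, but wrong PIN
    print(may_install_update(False, record, "4921"))  # False: owner-approved, but unsigned
```

The point of the design is that the vendor's key stops being a master key. A court could still compel Apple to sign special software, but a phone following this rule would refuse to install it without the owner's PIN, and the PIN is exactly what investigators lack in a case like San Bernardino. The cost Eric acknowledges shows up in the same place: no PIN, no update.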
My Bottom Line

Let's not weaken end-to-end encryption by making exceptions for law enforcement. We've already seen too many examples of government overreach when it comes to invading citizens' privacy. Once any government agency demands that suppliers provide it with a means to access and decrypt their customers' private information, suppliers and their customers will move their data to more secure platforms in other countries. What does that accomplish?
